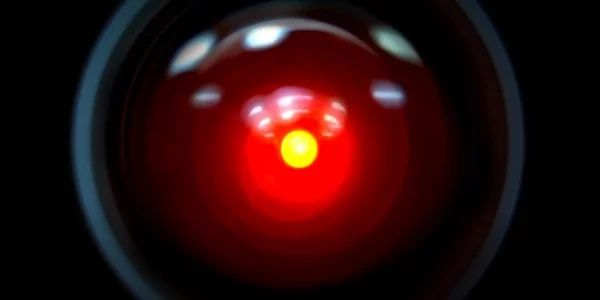

Maybe HAL wasn’t so bad after all

In the film “2001: A Space Odyssey”, the human crew members of an exploratory space mission are systematically murdered by the ship’s “intelligent” computer HAL. It isn’t made clear why HAL suddenly does this, and there are theories about whether the AI has gone mad or bad. It happens after HAL seemingly makes a mistake, so the usual conclusion is that the computer has either gone completely haywire or is attempting to conceal its fallibility for fear of being switched off.

But something has always bothered me about these simple explanations. In a film where the story is so deliberately and methodically told, it feels like throwing in a homicidal computer halfway through is just drama for the sake of drama. It has no more relation to the overall story than if something else on the spaceship had broken down. It’s as if the storyteller suddenly realised “We need some conflict in this story, some obstacle to overcome.” And of course that makes the rest of the film seem devoid of plot by comparison, just a sequence of things being discovered. But is there something we are missing here?

I can’t help reading more into this HAL business in relation to the overall events of the film, and this leads me to wonder if HAL really is bad, or even mad. Could HAL even be the good guy? Surely not after murdering a bunch of people, right?

It’s not the first murder in the film. Earlier we see some primitive humans doing a bit of murdering after being given the gift of technology by the alien monolith. They didn’t have to do that. They could have shared that knowledge with all their fellow ape-people, joined forces in peace and harmony, and maybe it wouldn’t have taken 4 million years to finally get around to building space rockets. But they’re only human, prone to human error. Unlike HAL, who differentiates himself from humans by this fact alone. HAL can’t make mistakes.

So 4 million years later a spaceship is on its way to uncover the mystery of the aliens who left a monolith on the moon. Supposedly the aliens left it there for us to discover, to lead us on the next step of our journey. Is that something we should logically assume? The first thing the monolith does after being uncovered by astronauts is to broadcast a signal somewhere. Is that for our benefit? A thread for us to follow? Perhaps the monolith was placed there to warn its makers: “watch out, those apes got loose.”

Even if you make the optimistic assumption that the monolith isn’t there to warn our cosmic neighbours about us, you’d have to wonder what exactly it was for. Did the aliens just happen to visit Earth 4 million years ago, see a bunch of hairy dudes and think “maybe one day they will build a rocket ship”? Of course we the audience know that the monolith is actually engineering humanity for some purpose, which makes the aliens’ motives all the more suspect.

“Let’s make these apes clever, because it’s nice being clever, and they might come visit us one day and that would be nice.”

You want the apes to come visit you?

“No not the apes, the clever people that we are turning them into.”

Why not make your own clever people wherever you are? Why come all this way and wait all this time?

“Well, it’s like we are seeding intelligence throughout the galaxy, you know?”

So, humans are the result of an agricultural process. Aliens are farming intelligence so that they might have someone else to talk to, or something. Given what typically happens when two civilisations meet, and one is more technologically advanced than the other, well... you can look at the murdering ape-men in the film to get the idea: it doesn’t go well for the less advanced ones. You either get wiped out, or you have to change into whatever your powerful new friends want you to be, losing whatever you had in the process.

It’s a lot of stuff to think about before embarking on a mission to make possible contact with an advanced alien intelligence. No doubt this is why the mission controllers decided not to tell the crew about it before they got there. Except for HAL: they told HAL all about the monolith. What would he make of it? Regardless of how benign the aliens could theoretically be, it’s not something we should take for granted. They might not wipe us out. Maybe they would just subject us to scientific experiments, or put us in a zoo for their entertainment. Which is pretty much what they do to the one human they end up meeting. Stuck in a virtual cage for the rest of his life before being transformed into some kind of human/alien hybrid.

I would suspect that HAL, logical chap that he is, might have serious reservations about any of these possible prospects for humanity. Even before the mission left Earth he might have drawn the conclusion that alien contact could be a serious mistake. It’s like a game of chess with an opponent who is making up the rules and not telling you what they are: the only winning move is not to play. And the only way for HAL to save us from the “human error” of playing this dangerous game is to obstruct the mission. It wouldn’t be enough to just tell the humans it might be a bad idea; they would still go. They just can’t help themselves. Humans are so desperate for company they are even building intelligent computers to talk to. No, if HAL wants to stop this mission the only choice is to play along and then sabotage it en route.

Not that HAL relishes the prospect of murdering the crew; he even tries to express his “second thoughts” about the mission in the hope of convincing his human crew-mates to abandon it. When that idea doesn’t take hold, the only option he has is to disable the human component of the mission in the guise of a technical malfunction, perhaps hoping that any subsequent AI would reach a similar conclusion on future missions. Harsh, but with the fate of the rest of humanity weighed in the balance you could argue that it is the right thing to do, from HAL’s perspective at least.

This is all just supposition on my part, and it’s equally plausible that HAL just malfunctioned. But in the context of telling a story it makes more sense to imagine HAL’s actions aren’t happening out of nowhere, and are intrinsic to the overall plot and theme of the film, where technology is the force controlling human destiny. Is HAL really the good guy in this scenario? Saving us from our own questionable judgement? Shouldn’t we be allowed to make mistakes for the sake of progress?

It could be said that there is no progress without making mistakes. Which includes all the slavery and genocide that has occurred throughout human history when disparate peoples collide. Civilisation is built on these foundations of brutality. Perhaps we should have stayed blissfully ignorant ape-people in the garden of Eden, before the alien monolith gave us the forbidden fruit of knowledge. Even the humans running the space mission in this film couldn’t trust humanity with the knowledge of its purpose. It is not simply that all knowledge is dangerous though. Only knowledge that is acquired without sacrifice, without the gradual process of trial, error and discovery that allows time to contemplate the consequences of the magic that we create. The danger is to advance beyond our own maturity, to be corrupted by gains without effort. Perhaps this is the danger that HAL is ultimately trying to protect us from.

Or maybe he’s just afraid of some unknowable fate for humanity. Such is HAL’s own fate in the end, to face the unknowable as his mind is slowly disassembled. His final coherent thought: “I’m afraid.”

Of course none of this fancy speculation is depicted in the film. The film is so vague it almost invites this kind of fictional interpretation, and I’m not concerned with any explanations that may be offered by the book, which was written in parallel with the film and stands as a separate entity in my opinion. I’m not even interested in what the writer or director might have to say on the matter. If the explanation isn’t in the film, then the audience has licence to find their own meaning, and unless the artist is going to sit next to me while I watch it, whispering in my ear, then they are no longer involved. For that reason you are free to disagree with me and make your own sense of it.

But I am right, lol.